Multi-View Label Prediction for Unsupervised Learning Person Re-Identification


Authors

Qingze Yin1, Guanan Wang2, Guodong Ding3, Shaogang Gong4, and Zhenming Tang1

1Nanjing University of Science and Technology, 2Chinese Academy of Sciences, 3National University of Singapore, 4Queen Mary University of London

Abstract

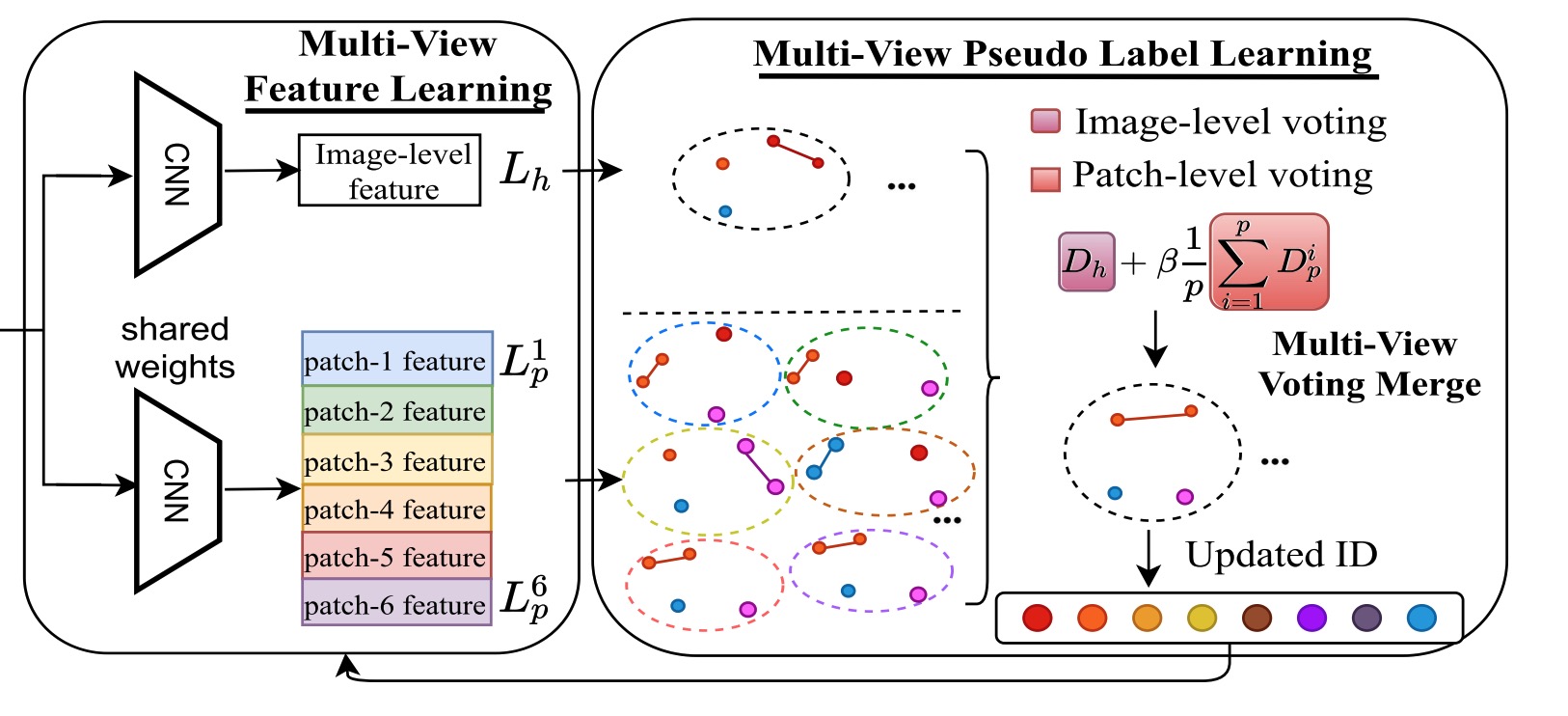

Person re-identification (ReID) aims to match pedestrian images across disjoint cameras. Existing supervised ReID methods train deep networks on identity-labeled images and are therefore limited by the scarcity of annotations. Recently, clustering-based unsupervised ReID has attracted increasing attention. It first clusters unlabeled images and uses the cluster indices as pseudo-identity-labels, then trains a ReID model with these pseudo-identity-labels. However, because inter-class variations are slight while intra-class variations are significant, pseudo-identity-labels learned by clustering algorithms are usually noisy and coarse. To alleviate these problems, in addition to clustering pseudo-identity-labels, we propose to learn pseudo-patch-labels, which brings two advantages: (1) Patches naturally reduce the effect of backgrounds, occlusions, and carried objects, since these usually occupy only small parts of an image, which helps overcome noisy labels. (2) It is plausible that patches from different pedestrians belong to the same pseudo-identity-label. For example, two pedestrians are quite likely to wear the same shoes or the same pants, but unlikely to wear both. Experiments demonstrate that our proposed method achieves the best performance by a large margin on both image- and video-based datasets.
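The two labeling views described above (cluster whole-image features into pseudo-identity-labels, and cluster patch features into pseudo-patch-labels) can be illustrated with a toy sketch. This is not the authors' implementation: it swaps in a plain k-means with deterministic farthest-point initialisation (many clustering-based ReID pipelines use other algorithms such as DBSCAN), and it assumes, hypothetically, that a patch feature is simply a contiguous equal-length chunk of the image feature, e.g. one horizontal stripe. The names `kmeans`, `split_patches`, and `multi_view_pseudo_labels` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def _dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(points: Sequence[Vector], k: int, iters: int = 20) -> List[int]:
    """Plain k-means (a stand-in clustering step) returning one cluster index
    per point; the indices are used directly as pseudo-labels."""
    # Deterministic farthest-point init: start from the first point, then keep
    # adding the point farthest from every centre chosen so far.
    centers = [list(points[0])]
    while len(centers) < k:
        far = max(points, key=lambda p: min(_dist(p, c) for c in centers))
        centers.append(list(far))

    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centre.
        new_labels = [min(range(k), key=lambda j: _dist(p, centers[j])) for p in points]
        # Update step: move each centre to the mean of its members;
        # an empty cluster keeps its previous centre.
        for j in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
        if new_labels == labels:
            break
        labels = new_labels
    return labels


def split_patches(feature: Vector, num_patches: int) -> List[Vector]:
    """Hypothetical patch view: contiguous equal-length chunks of a feature."""
    step = len(feature) // num_patches
    return [feature[i * step:(i + 1) * step] for i in range(num_patches)]


def multi_view_pseudo_labels(
    feats: Sequence[Vector], num_patches: int, k_identity: int, k_patch: int
) -> Tuple[List[int], List[List[int]]]:
    """Pseudo-identity-labels from whole features, plus one list of
    pseudo-patch-labels per patch position, clustered independently."""
    identity_labels = kmeans(feats, k_identity)
    patches = [split_patches(f, num_patches) for f in feats]
    patch_labels = [kmeans([p[i] for p in patches], k_patch) for i in range(num_patches)]
    return identity_labels, patch_labels


# Toy features: [upper-body patch (2 dims) | lower-body patch (2 dims)].
feats = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.1, 0.0, 0.1],    # same person as the first image
    [10.0, 10.0, 0.0, 0.05], # different person, similar lower body
    [10.0, 10.1, 8.0, 8.0],
]
ids, parts = multi_view_pseudo_labels(feats, num_patches=2, k_identity=2, k_patch=2)
print(ids)    # [0, 0, 1, 1]
print(parts)  # [[0, 0, 1, 1], [0, 0, 0, 1]]
```

In this toy run the third image gets a different pseudo-identity-label from the first two, yet shares their lower-body pseudo-patch-label. This mirrors the "same shoes or same pants" intuition: patch labels can group parts across different identities.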

Resources

Files: [pdf]

Citation:

@article{yin2021multi,

title={Multi-View Label Prediction for Unsupervised Learning Person Re-Identification},

author={Yin, Qingze and Wang, Guanan and Ding, Guodong and Gong, Shaogang and Tang, Zhenming},

journal={Signal Processing Letters},

year={2021}

}